In their most basic form, text-to-image models convert natural language descriptions into visuals, either as static images or as animations. These systems fall under the category of generative AI.

Image-based marketing is a key strategy for many businesses. However, it can be difficult to find the right image, especially when you’re looking for something specific.

How Text-to-Image Converters Work

A text-to-image converter uses a machine learning model to take in a natural language description and generate matching images. It falls under the umbrella of generative AI and is one of the most popular use cases for deep learning.

The model first encodes the input text into a numerical representation (an embedding) and uses it to generate a low-resolution image. Then, it upscales that image using an auxiliary deep learning model to fill in details and improve quality. Early models typically encoded the text with a recurrent neural network such as an LSTM, although transformer text encoders and diffusion-based image generators have become the more common choice in recent years.
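The two-stage flow described above (a low-resolution image first, then upscaling) can be illustrated with a toy sketch. Nothing here is a trained model: `encode_text`, `generate_low_res`, and `upscale_nearest` are hypothetical stand-ins that only mimic the shape of the pipeline, using a hash in place of a text encoder and nearest-neighbour upscaling in place of a super-resolution network.

```python
import hashlib


def encode_text(prompt: str, dim: int = 8) -> list[float]:
    """Toy stand-in for a text encoder: hash the prompt into `dim` floats in [0, 1]."""
    digest = hashlib.sha256(prompt.encode("utf-8")).digest()
    return [digest[i] / 255 for i in range(dim)]


def generate_low_res(embedding: list[float], size: int = 4) -> list[list[float]]:
    """Toy stand-in for the base generator: fill a size x size grid from the embedding."""
    n = len(embedding)
    return [[embedding[(r * size + c) % n] for c in range(size)] for r in range(size)]


def upscale_nearest(image: list[list[float]], factor: int = 2) -> list[list[float]]:
    """Toy stand-in for the upscaling model: repeat each pixel `factor` times per axis."""
    out = []
    for row in image:
        wide = [px for px in row for _ in range(factor)]
        out.extend(list(wide) for _ in range(factor))
    return out


def text_to_image(prompt: str, size: int = 4, factor: int = 2) -> list[list[float]]:
    """Chain the three stages: encode the text, generate low-res, then upscale."""
    return upscale_nearest(generate_low_res(encode_text(prompt), size), factor)


image = text_to_image("a red bicycle on a beach")
print(len(image), "x", len(image[0]))  # grid dimensions after upscaling
```

In a real system each of these three functions would be a learned neural network, and the output would be an RGB image rather than a grid of grayscale values.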

The reverse task, converting a picture into text, is also common. For example, you may need to scan articles or papers and convert them into an editable document. Or, you might want to repurpose images from social media in your work. With an online photo-to-text converter, you can do this quickly and accurately.

What is Text-to-Image Conversion?

In text-to-image conversion, a model converts an input text description into an image that matches it as closely as possible. It falls under the umbrella term generative AI, which covers models that produce new content rather than only analyzing existing data.

A growing ecosystem of tools and resources for text-to-image generation has emerged online. Many are open source and distributed as executable notebooks on Google’s Colab service, which has been instrumental to the growth of the field. Innovators contribute to the community by developing new text-to-image generation models and techniques. Conservators work to preserve these innovations in, for example, GitHub repositories and on Google’s Colab platform.

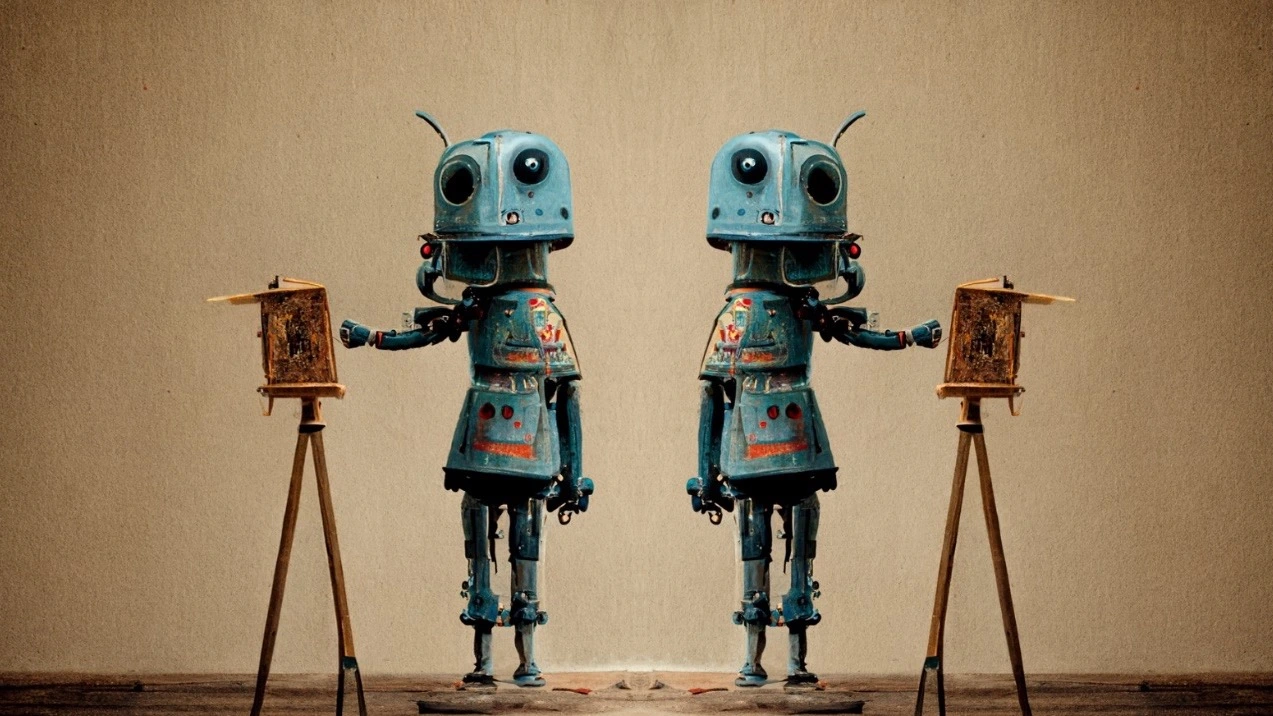

Text-to-image creation has reached a point of ubiquity where anyone can create digital images and artworks simply by providing prompts in natural language. This raises the question: Is such art creative? This paper explores the nature of creativity involved in text-to-image creation, examining the other three Ps in Rhodes’ four P model of creativity (person, process and press) to demonstrate how a product-centered view of creativity fails to adequately assess the extent to which such art is creative.

Why Is Text-to-Image Conversion Important?

Converting in the opposite direction, from images to text, is also useful in a variety of ways. For example, it can help people with communication disorders by replacing pictures with words that they can understand better. It can also aid research by converting images of data into text documents that can then be analyzed for trends and insights.

Text is converted to images by a machine learning model that takes a natural language description as input and outputs an image matching that description. Earlier systems commonly encoded the text with a long short-term memory (LSTM) neural network and generated the image with a conditional generative adversarial network, while more recent systems favor diffusion models.

If you need to convert images into text for a project, it is a good idea to use an online tool like HiPDF or desktop applications like PDFelement. This will save you time and effort by letting you create your text document without needing to manually enter the information yourself.

How Text-to-Image Conversion Works

A text-to-image model takes a natural language description and produces an image that matches it. Models of this type began to be developed in the mid-2010s, as a result of advances in deep neural networks. A common design pairs a text encoder, historically a recurrent neural network and more recently a transformer, with an image generator such as a conditional generative adversarial network or a diffusion model.

A key challenge is bridging the semantic gap between text and images. This involves understanding the meaning of the words in a text description, relating them to the objects that should appear in the image, and then turning those object representations into an image.

To do this, a retrieval-based system first searches for a picture that matches one or more keywords from the text prompt. Then it filters out images with a high polysemy count, and finally retrieves a picture with a low ambiguity score. This process is repeated until the result resembles the intended target as closely as possible. Generative models, by contrast, synthesize a new image rather than looking one up.
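The search, filter, and retrieve steps above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not any particular system's method: the `Candidate` fields, the `max_polysemy` threshold, and the sample catalog are all hypothetical, and the polysemy counts and ambiguity scores are made-up numbers rather than values computed from real images.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Candidate:
    caption: str
    polysemy_count: int  # hypothetical: how many distinct senses the caption's keywords carry
    ambiguity: float     # hypothetical score; lower means less ambiguous


def retrieve(prompt: str, candidates: list[Candidate],
             max_polysemy: int = 2) -> Optional[Candidate]:
    keywords = set(prompt.lower().split())
    # Step 1: keep candidates whose caption shares at least one keyword with the prompt.
    matches = [c for c in candidates if keywords & set(c.caption.lower().split())]
    # Step 2: drop candidates whose polysemy count is above the threshold.
    filtered = [c for c in matches if c.polysemy_count <= max_polysemy]
    # Step 3: return the remaining candidate with the lowest ambiguity, or None.
    return min(filtered, key=lambda c: c.ambiguity, default=None)


catalog = [
    Candidate("river bank at sunset", polysemy_count=4, ambiguity=0.7),
    Candidate("sunset over a wheat field", polysemy_count=1, ambiguity=0.2),
    Candidate("city skyline at sunset", polysemy_count=2, ambiguity=0.4),
    Candidate("bowl of fruit", polysemy_count=1, ambiguity=0.1),
]

best = retrieve("golden sunset", catalog)
print(best.caption if best else "no match")
```

The "bowl of fruit" entry has the lowest ambiguity overall but is never considered, because it fails the keyword match in the first step.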
